MCP Servers: What They Are and Why Organisations Need Them

October 2, 2025

What is an MCP server?

“MCP” stands for Model Context Protocol, an open standard introduced by Anthropic in November 2024 to unify how AI systems (especially large language models, or LLMs) interface with external tools, systems, and data sources.

An MCP server acts as a bridge. It exposes capabilities (like database queries, file storage, API calls, or domain-specific logic) in a standardized way so AI agents (the clients) can call them consistently and securely.

Examples include:

- A GitHub MCP server that lets an AI list open pull requests or create a branch.

- A file-system MCP server that allows “read this file” or “write this file.”

- A custom MCP server that integrates with internal CRMs, ERPs, or analytics systems.

Because MCP is a standard, multiple AI hosts and tools can share the same integrations, avoiding duplicated work.
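To make "exposes capabilities in a standardized way" more concrete: an MCP server advertises each tool with a name, a description, and a JSON Schema for its inputs. The sketch below shows roughly what a descriptor for the GitHub example could look like. The tool name `list_open_pull_requests`, its parameters, and the helper `missing_arguments` are hypothetical and are not taken from any real GitHub MCP server.

```python
import json

# Hypothetical tool descriptor, shaped like one entry in an MCP `tools/list` result.
LIST_PRS_TOOL = {
    "name": "list_open_pull_requests",
    "description": "List open pull requests for a repository.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "owner": {"type": "string"},
            "repo": {"type": "string"},
        },
        "required": ["owner", "repo"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list:
    """Return the required argument names that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


if __name__ == "__main__":
    # Show the descriptor as the JSON a client would receive.
    print(json.dumps(LIST_PRS_TOOL, indent=2))
    # A call that forgets `repo` can be rejected before it reaches GitHub.
    print(missing_arguments(LIST_PRS_TOOL, {"owner": "example-org"}))
```

Because the descriptor is plain data, any MCP-capable host can discover the tool and work out how to call it without integration code written specifically for that host.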

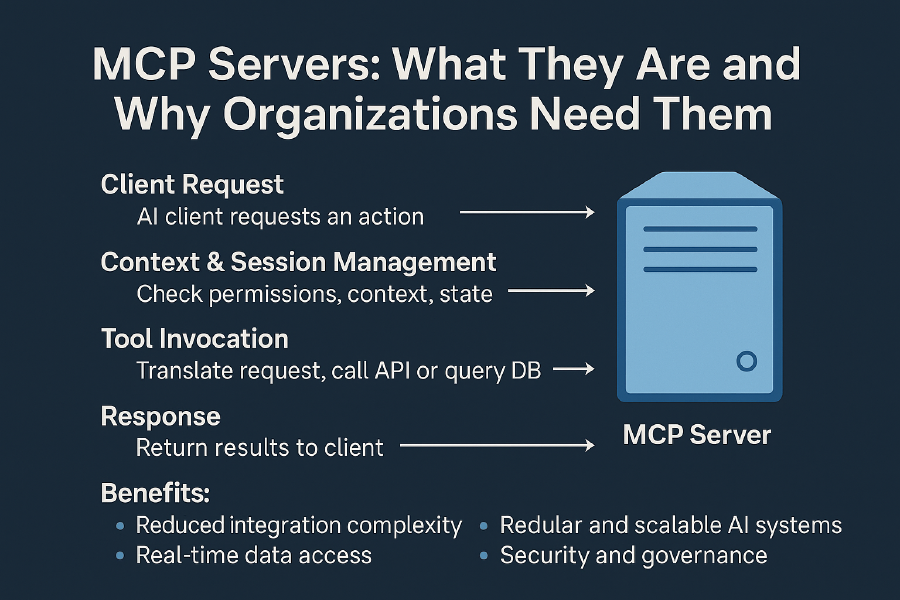

How MCP servers work

At a high level:

- Client request → The AI client (agent, chatbot, LLM wrapper) asks the MCP server to perform an action.

- Context & session management → The server checks permissions, context, and state.

- Tool invocation → The server translates the request into an API call, query, or internal action.

- Response → Results are returned to the client, along with logs or metadata.

- Chaining → Complex requests can be broken into several tool calls, often across multiple servers, with the client or host typically deciding the order.

The AI agent doesn’t need bespoke connectors—it just speaks MCP.
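The request, invocation, and response steps above can be sketched in a few lines. MCP messages are JSON-RPC 2.0, and tools are listed and called through the `tools/list` and `tools/call` methods. Everything else here is an illustrative assumption: the function `handle_request` and the `echo` and `add` tools are hypothetical. The initialization handshake, permission checks, and input schemas are left out for brevity. A production server would normally be built on an official MCP SDK rather than hand-rolled like this.

```python
import json
from typing import Callable

# Hypothetical in-memory tools; a real server would call an API or database here.
TOOLS: dict[str, Callable[..., str]] = {
    "echo": lambda text: text,
    "add": lambda a, b: str(a + b),
}


def _error(request_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    request = json.loads(raw)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        # Simplified: real descriptors also carry a description and an inputSchema.
        result = {"tools": [{"name": name} for name in sorted(TOOLS)]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(request_id, -32602, f"Unknown tool: {params.get('name')}")
        try:
            text = tool(**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        except Exception as exc:
            # Tool failures are reported inside the result so the model can react to them.
            result = {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    else:
        return _error(request_id, -32601, f"Method not found: {method}")

    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


if __name__ == "__main__":
    call = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
    }
    print(handle_request(json.dumps(call)))
```

The client only needs to know the method names and the message shape. What happens inside each tool stays private to the server.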

Why organisations need MCP servers

1. Reduced integration complexity

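Each system needs one MCP server instead of a custom connector for every AI application that uses it. With 5 AI applications and 8 internal systems, that means 8 MCP servers (plus MCP client support in each application) rather than up to 40 pairwise connectors.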

2. Modularity and scalability

Add new tools as standalone MCP servers and plug them in as needed.

3. Real-time data access

LLMs can query live, up-to-date information instead of relying only on training data that may be out of date.

4. Vendor independence

Reuse the same MCP servers across multiple AI platforms, so switching or adding a model provider does not mean rebuilding your integrations.

5. Security and governance

Centralise access control, auditing, and logging at the MCP layer.

6. Faster development cycles

Deploy new AI-enabled features more quickly by reusing MCP modules.

7. Industry adoption

Microsoft, OpenAI and others are already integrating MCP support into their ecosystems.

Risks and challenges

- Security risks: If misconfigured, MCP servers can expose sensitive systems.

- Operational overhead: Each server must be developed, deployed, and monitored.

- Versioning: Changes to the protocol and to the underlying APIs must be tracked and managed.

- Latency: Each tool call adds a round trip, so long chains of calls can introduce noticeable delays.

- Supply chain risks: Malicious third-party MCP servers could compromise data.

Mitigation strategies include least-privilege access, code audits, monitoring, and preferring trusted or in-house implementations.
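Least-privilege access and monitoring can both be enforced at a single checkpoint in front of the tools. The following is a minimal sketch of that idea, not a prescribed MCP mechanism: the `ToolGateway` class, the client ID `support-bot`, and the tool names are all hypothetical.

```python
import time
from dataclasses import dataclass, field


@dataclass
class ToolGateway:
    """Allow-list check plus audit trail in front of tool calls (hypothetical design)."""

    # Maps a client ID to the set of tool names that client may call.
    allowed: dict
    # Every authorization decision is recorded, whether permitted or denied.
    audit_log: list = field(default_factory=list)

    def authorize(self, client_id: str, tool_name: str) -> bool:
        """Return True only if the client is explicitly allowed to use the tool."""
        permitted = tool_name in self.allowed.get(client_id, set())
        self.audit_log.append(
            {
                "time": time.time(),
                "client": client_id,
                "tool": tool_name,
                "permitted": permitted,
            }
        )
        return permitted


if __name__ == "__main__":
    gateway = ToolGateway(allowed={"support-bot": {"crm.read_contact"}})
    print(gateway.authorize("support-bot", "crm.read_contact"))
    print(gateway.authorize("support-bot", "crm.delete_contact"))
    print(len(gateway.audit_log))
```

Denying by default means a newly added tool stays unreachable until someone grants access to it, and the audit log records refused attempts as well as successful ones.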

Use cases

- Developer tools: GitHub or Jira MCP servers enable AI agents to manage issues and PRs.

- Enterprise systems: Internal CRMs, ERPs, or BI tools exposed via MCP for decision support.

- Knowledge access: Document retrieval and summarisation from internal knowledge bases.

- Automation: Orchestrating multi-step workflows across different systems.

Why invest now

Without MCP, every AI assistant needs bespoke connectors. With MCP, you build an integration once, reuse it everywhere, and enforce consistent governance.

Organisations adopting MCP today are better placed to scale AI integrations faster, more securely, and with lower long-term costs.